Perform the following steps to connect to Sales and Service Cloud:

- Under the Setup gear, select Customer Data Cloud Setup.

- Under Configuration, select Salesforce CRM.

- Click Connect.

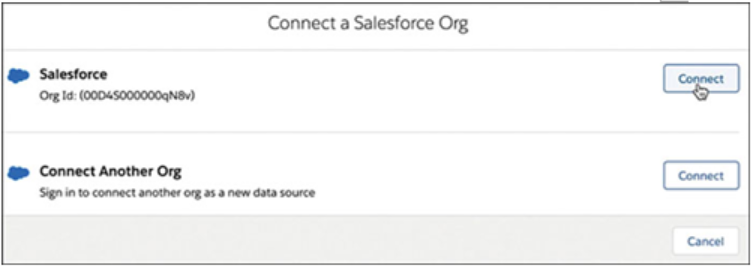

- Click Connect for the Salesforce org where Data Cloud is provisioned, or click Connect next to Connect Another Org to add a different org (see Figure 6.9). Enter your user credentials to establish the connection with Data Cloud.

Figure 6.9: Connect Salesforce Orgs

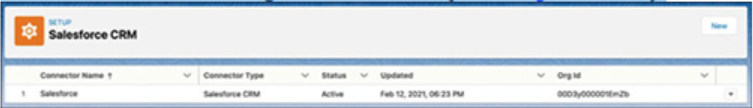

- Once your Salesforce org(s) are connected, you can view the connection details from the Setup screen. You can return at any time to add more orgs or delete an existing connection (see Figure 6.10).

Figure 6.10: View connected CRM orgs

In the same way, you can also connect your B2C Commerce Cloud or B2B Commerce Cloud. The B2C Commerce Cloud connector provides access to customer profile and transactional (purchase and product) data from B2C Commerce Cloud. This data supports enhanced insights and analytics use cases and, when combined with other data points, improves personalization opportunities. The connector can link one or more B2C Commerce production instances to a single CDP instance.

The prerequisites for this connection are as follows:

• The B2C Commerce instance should be implemented and owned by a customer.

• Commerce Einstein must be activated for data to flow from B2C Commerce to CDP.

• The user configuring the connection needs to have access to B2C Commerce Business Manager.

Connecting Amazon S3 Data Stream

To initiate the flow of data from an Amazon S3 source, follow these steps in Data Cloud:

- Set up a data stream in Data Cloud to enable data flow from an Amazon S3 source.

- Ensure that you understand the Data Stream Edit Settings and have configured the necessary S3 Bucket Permissions for Ingestion and Activation.

- In the Data Cloud interface, navigate to the Data Streams tab and click the New button. Alternatively, you can use the App Launcher to locate and select Data Streams.

- Choose Amazon S3 as the data source.

- Provide the credentials for accessing the S3 bucket and configure the source details as follows:

• S3 Bucket Name: Enter the name of the S3 bucket, that is, the public cloud storage resource in AWS that holds your files.

• S3 Access Key: Enter the programmatic username for API access to AWS.

• S3 Secret Key: Enter the programmatic password for API access to AWS.

• Directory: Specify the path name or folder hierarchy that leads to the physical location of the file.

• File Name: Provide the name of the file to retrieve from the specified directory. You can use wildcards; for example, `*abc*.csv` matches CSV files containing abc in their name. Each time the data stream runs, it imports all files that satisfy the wildcard pattern.

• Source: Indicate the external system from where the data is sourced. You can use the same source for multiple data streams.

- Fill in the complete object details. You have two options:

• Create a data lake object (DLO) according to the specified naming standards.

• Use an existing DLO. In this case, make sure to familiarize yourself with the guardrails associated with using an existing DLO to create a data stream.

- Review the table fields and make any necessary edits.

- Add new formula fields if required. You can map these fields to an existing DLO field or create a new DLO field. For more information, refer to the Formula Expression Library and Formula Expression Use Cases.

- Provide the deployment details and click the Deploy button.

- Click Start Data Mapping.

- Map your DLO to the semantic data model to utilize the data in segments, calculated insights, and other use cases.
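
To make the File Name wildcard behavior from the source details more concrete, the following Python sketch mimics how a pattern such as `*abc*.csv` could select files in a directory. It is an illustration only and not Data Cloud's implementation: the `select_files` helper, the sample object keys, and the choice to ignore nested subfolders are all assumptions made for this example.

```python
from fnmatch import fnmatchcase


def select_files(keys, directory, pattern):
    """Return the keys directly under `directory` whose file name matches `pattern`.

    Hypothetical helper for illustration; Data Cloud's matching rules may differ.
    """
    trimmed = directory.strip("/")
    prefix = trimmed + "/" if trimmed else ""
    selected = []
    for key in keys:
        if not key.startswith(prefix):
            continue  # object lives outside the configured directory
        name = key[len(prefix):]
        if "/" in name:
            continue  # assumption of this sketch: nested subfolders are ignored
        if fnmatchcase(name, pattern):  # case-sensitive shell-style wildcard match
            selected.append(key)
    return selected


# Hypothetical object keys in an S3 bucket
keys = [
    "exports/daily/abc_2024.csv",
    "exports/daily/orders_abc.csv",
    "exports/daily/orders.csv",
    "exports/daily/abc_notes.txt",
    "exports/archive/abc_old.csv",
]

matched = select_files(keys, "exports/daily/", "*abc*.csv")
print(matched)
```

Running a check like this against a listing of your bucket before deploying the data stream can help confirm that the pattern picks up only the files you intend to ingest.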

Many other data sources can be connected to Data Cloud, such as Azure storage, Google Cloud, and web and mobile applications. We cannot cover all of them in this book; refer to the Salesforce Data Cloud documentation for details on connecting other data sources.